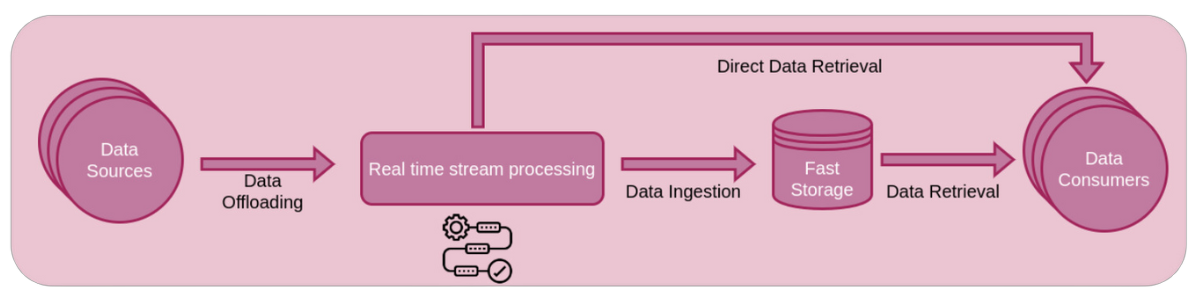

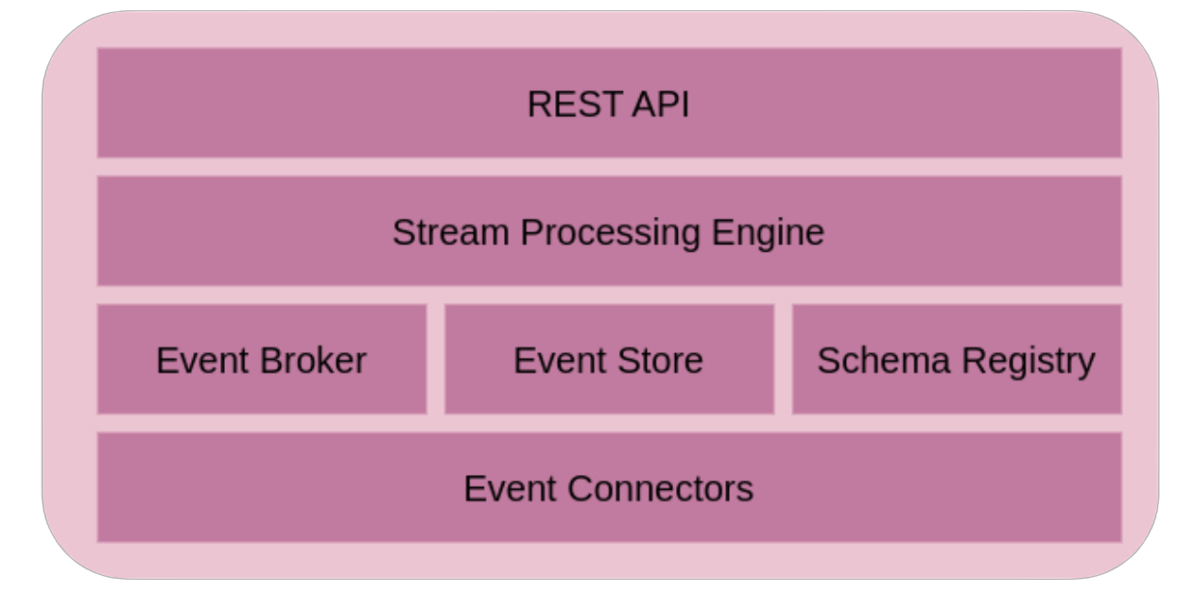

Across many business sectors, the speed of decision-making is increasingly crucial to an organization's profitability. By continuously monitoring core business performance through real-time data analysis, management can make timely decisions that maximize revenue across channels and limit losses caused by misguided initiatives or external factors.

For digital-native companies, analyzing in real time how customers behave at each touchpoint makes it possible to quickly improve the effectiveness of automated user suggestions, increase engagement, and enhance the overall service experience. Conversely, being slow to adapt or correct a customer-facing digital initiative can erode the service's appeal and customers' trust in it, leading to lost revenue or subscription cancellations.

Real-time visibility into business performance can also be a competitive advantage for companies in more traditional sectors, such as retail, enabling them to launch marketing initiatives or promotional campaigns much more quickly than in the past, especially during annual sales events or special occasions.

Furthermore, the ability to process domain events in real time is often a requirement for supporting the operational side of the business itself, as with fraud prevention in online banking or real-time inventory calculation for multichannel retailers.