In our Data Strategy proposal, cloud adoption plays an increasingly central role in the solutions we deliver to clients. As explained in detail in the Use Case “Migrate to Cloud,” the proposal is structured into four distinct phases:

- Assessment

- Foundation

- Mobilization

- Execution

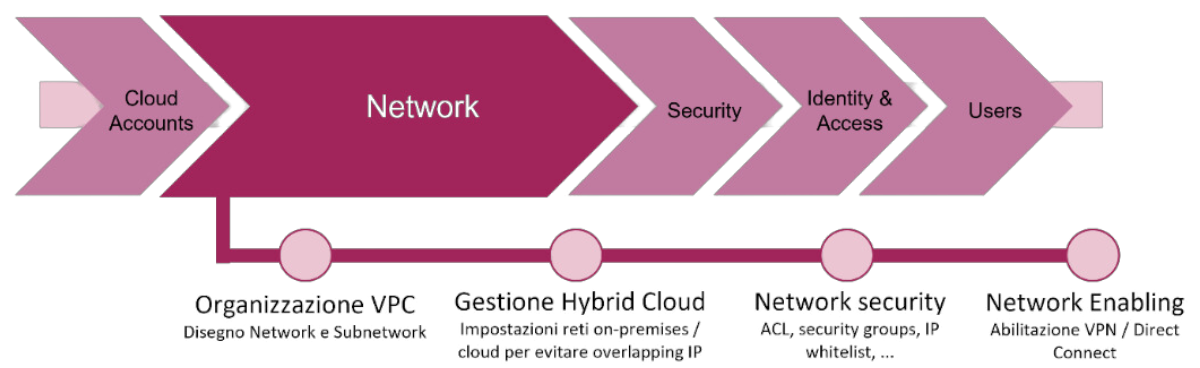

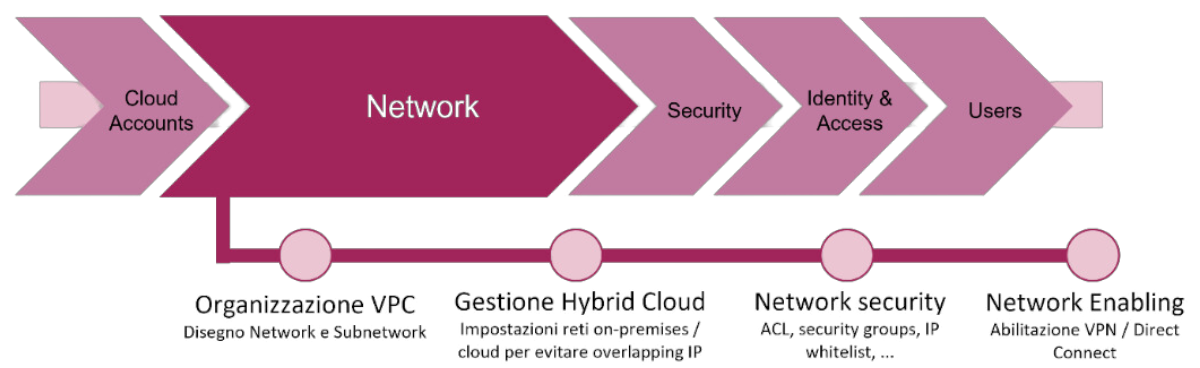

It is during the Foundation phase that we explore and implement the networking strategies best suited to each client's use cases. In particular, this is the phase in which the Landing Zone is implemented on the chosen cloud provider.

The Landing Zone serves as the central hub for cloud management and allows the definition of various aspects within it:

Each of the steps highlighted in the image is implemented by leveraging the principles of Infrastructure as Code (IaC), generating scripts in which various infrastructure components and their configurations are explicitly defined in a declarative manner.

Once the IaC tool is chosen (for example, Terraform, especially in multi-cloud contexts), the created scripts enable precise management of all infrastructure components, including management services in the Network domain.
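To make the declarative idea concrete, the following is a minimal sketch in Python, not a description of how Terraform or any other IaC tool is implemented. It assumes infrastructure can be modeled as a dictionary of named resources; the resource names, the CIDR values, and the `plan` function are hypothetical and exist only for illustration. The point is that the script states the desired end state, and the tooling works out which changes are needed to reach it.

```python
def plan(desired, actual):
    """Compare desired and actual state and list the changes needed."""
    changes = []
    for name, config in desired.items():
        if name not in actual:
            changes.append(("create", name))
        elif actual[name] != config:
            changes.append(("update", name))
    for name in actual:
        if name not in desired:
            changes.append(("delete", name))
    return changes


# Hypothetical desired state, as it would be declared in the IaC scripts.
desired = {
    "vpc-prod": {"cidr": "10.0.32.0/20"},
    "vpc-dev": {"cidr": "10.0.0.0/20"},
}

# Hypothetical state currently deployed on the cloud provider.
actual = {
    "vpc-dev": {"cidr": "10.0.0.0/24"},
    "vpc-old": {"cidr": "10.1.0.0/16"},
}

changes = plan(desired, actual)
for action, name in changes:
    print(action, name)
```

In this toy model the scripts never say "create" or "delete" explicitly; those actions are derived from the difference between the declared and the deployed state.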

VPC Organization

Virtual Private Clouds (VPCs) are one of the key components of a cloud infrastructure. They allow resources to be isolated within a virtualized environment, ensuring greater security and flexibility. Properly designing and organizing one or more VPCs is crucial to getting the most out of them.

When designing a VPC, it is essential to plan the allocation of IP address spaces correctly using CIDR blocks (Classless Inter-Domain Routing). This involves dividing IP addresses into blocks that reflect scalability needs and the logical subdivision of resources.
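As an illustration of this kind of planning, the sketch below uses Python's standard `ipaddress` module to carve a single address range into equally sized, non-overlapping blocks, one per VPC. The supernet `10.0.0.0/16`, the VPC names, the `/20` block size, and the `plan_vpc_blocks` helper are all assumptions chosen for the example; a real plan would size each block according to the expected growth of the environment it hosts.

```python
import ipaddress

# Hypothetical address plan: one /16 range reserved for the whole cloud estate.
SUPERNET = ipaddress.ip_network("10.0.0.0/16")


def plan_vpc_blocks(supernet, names, new_prefix):
    """Assign each named VPC its own non-overlapping block of size /new_prefix."""
    blocks = supernet.subnets(new_prefix=new_prefix)
    # zip stops at the shorter input, so check that we did not run out of blocks.
    plan = dict(zip(names, blocks))
    if len(plan) < len(names):
        raise ValueError("supernet too small for the requested VPCs")
    return plan


vpc_plan = plan_vpc_blocks(SUPERNET, ["dev", "staging", "prod"], new_prefix=20)
for name, block in vpc_plan.items():
    print(f"{name}: {block} ({block.num_addresses} addresses)")
```

A `/16` split into `/20` blocks yields 16 blocks of 4096 addresses each, so this hypothetical plan leaves room for 13 further VPCs before the range is exhausted.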

A primary feature of VPCs is the ability to create one or more subnets within them, each with a CIDR block that falls inside the block defined for the VPC itself. This adds a further hierarchical level for organizing resources, applying security policies, and managing networking in general.
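The containment rule for subnets can be checked before anything is deployed. The sketch below, again using only Python's `ipaddress` module, validates a set of proposed subnets against a VPC block: each subnet must lie inside the VPC's CIDR block and must not overlap another subnet. The VPC block, the subnet names, and the `validate_subnets` helper are hypothetical values picked for the example.

```python
import ipaddress

# Hypothetical VPC block; it covers 10.0.0.0 through 10.0.15.255.
vpc_cidr = ipaddress.ip_network("10.0.0.0/20")


def validate_subnets(vpc, subnets):
    """Return a list of problems: subnets outside the VPC or overlapping each other."""
    problems = []
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in subnets.items()}
    for name, net in nets.items():
        if not net.subnet_of(vpc):
            problems.append(f"{name} ({net}) is outside {vpc}")
    names = list(nets)
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            if nets[a].overlaps(nets[b]):
                problems.append(f"{a} overlaps {b}")
    return problems


proposed = {
    "public-a": "10.0.0.0/24",
    "private-a": "10.0.1.0/24",
    "data-a": "10.0.16.0/24",  # deliberately outside the VPC block
}
problems = validate_subnets(vpc_cidr, proposed)
for problem in problems:
    print(problem)
```

Here the first two subnets pass, while `data-a` is reported because `10.0.16.0/24` starts just past the end of the `/20` block.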

Single VPC

The simplest topology is the single VPC, in which all cloud resources reside. This scenario is suitable for feasibility studies on cloud provider services, development environments, or small applications with minimal security requirements.

Even for a feasibility study, however, it is advisable to place resources in at least two VPCs and to test the configurations needed to integrate them and enable communication between them. This way, issues that could arise in production environments can be anticipated early on.

Multiple VPCs

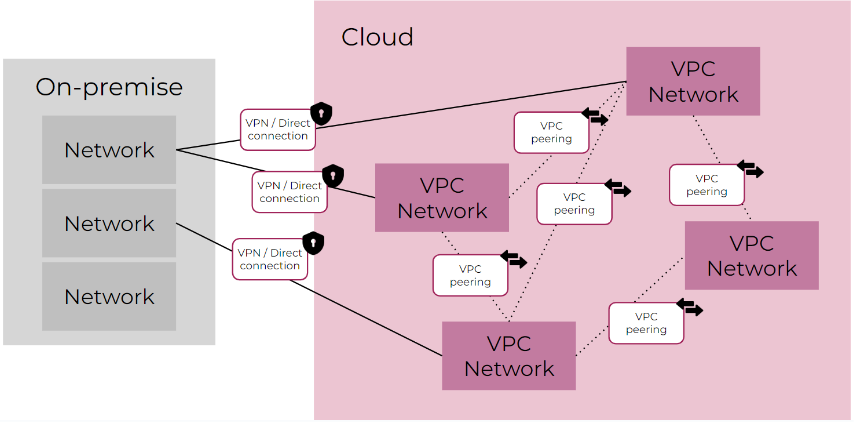

This topology involves creating multiple separate VPCs within the same cloud account. Each VPC functions as an isolated entity, with the possibility to configure connections between them. This topology is ideal for separating production and development environments or hosting applications with different security requirements.

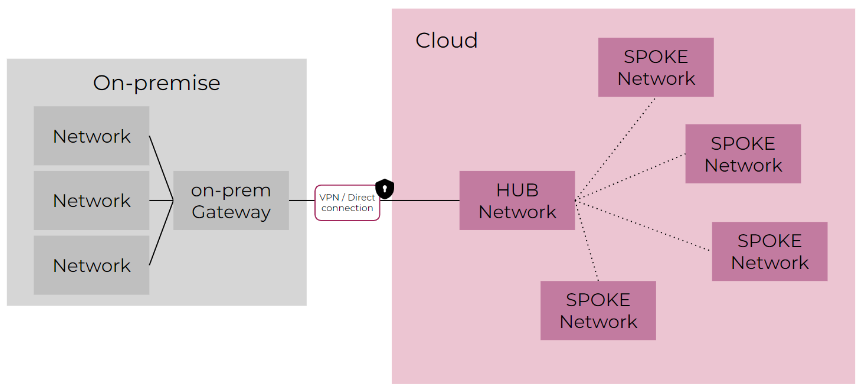

In the case of multiple VPCs, leveraging services provided by various cloud providers allows for different architectures. For example, central VPCs, known as “Hubs,” can act as network managers for other satellite VPCs, called “Spokes.” Alternatively, there can be Transit VPCs to which other VPCs can connect to communicate with each other.
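One way to see why a central hub is attractive is to count the connections each design requires. The sketch below is a simplified model in Python, not tied to any provider's service: VPCs are plain names, a connection is an unordered pair, and the VPC names and helper functions are hypothetical. It compares connecting every VPC directly to every other one with connecting each spoke only to a hub.

```python
from itertools import combinations


def full_mesh_links(vpcs):
    """Every VPC connected directly to every other VPC."""
    return {frozenset(pair) for pair in combinations(vpcs, 2)}


def hub_and_spoke_links(hub, spokes):
    """Every spoke connected only to the hub."""
    return {frozenset((hub, spoke)) for spoke in spokes}


# Hypothetical estate: one hub and four spoke VPCs.
spokes = ["dev", "staging", "prod", "shared-data"]
mesh = full_mesh_links(["hub"] + spokes)
star = hub_and_spoke_links("hub", spokes)

print(f"direct connections between all VPCs: {len(mesh)}")
print(f"hub and spoke connections: {len(star)}")
```

With n VPCs, direct connections grow as n(n-1)/2 while a hub needs only n-1, which is why the difference widens quickly as VPCs are added. Whether traffic between two spokes can actually pass through the hub depends on the provider service used to connect them, so the model only counts connections and says nothing about routing.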

The next section examines the Point-to-Point and Hub & Spoke concepts in more detail, including the management of on-premises networks, which together define the hybrid cloud management model.